INTERPRETING QUALITY: GLOBAL PROFESSIONAL STANDARDS?

Franz Pöchhacker, University of Vienna.

Published in: Ren W. (ed.), Interpreting in the Age of Globalization: Proceedings of the 8th National Conference and International Forum on Interpreting (pp. 305-318). Beijing: Foreign Language Teaching and Research Press, 2012.

Abstract: The complex and multidimensional notion of quality is addressed here from the perspective of the professional service providers. With Bühler’s pioneering survey among conference interpreters serving as the point of departure, the importance of various quality criteria is investigated on the basis of a worldwide web-based survey conducted in the context of a larger research project at the University of Vienna. The findings, which point to a stable pattern of preferences, are discussed with regard to their generalizability on a global scale, with special reference to China and Chinese.

1. INTRODUCTION

Ever since the profession of conference interpreting emerged on an international scale in the mid-twentieth century, the concept of quality has been a major concern in professional practice and training. Nevertheless, it was not until the 1980s that the topic of quality came to be addressed explicitly and on the basis of systematic investigation. A key role in this endeavor fell to AIIC, the International Association of Conference Interpreters founded in 1953, whose strict requirements for the admission of new members, in conjunction with a language classification scheme and detailed specifications for appropriate working conditions, served as an early paragon for quality assurance in the profession.

In a brochure addressed to would-be conference interpreters, AIIC (1982) referred to quality as “that elusive something which everyone recognises but no one can successfully define.” The ability to recognize quality was indeed demanded of AIIC members when they were asked to assess the performance of candidates for membership, and it was in this context that the challenge of defining quality was taken up by Hildegund Bühler in a pioneering empirical study.

The present paper reviews that seminal piece of research and reports on an effort to replicate it on a worldwide scale using state-of-the-art survey techniques. Special emphasis will be given to methodological issues as well as to the question raised in the title of this paper, that is: to what extent can the findings from the international survey be taken to reflect “global standards” for the relative importance of the performance quality criteria under study? In other words, can a global survey approach do justice to socio-culturally specific aspects of the phenomenon under study – or is there a need to take account of what we might call “interpreting quality with Chinese characteristics”?

2. CRITERIA FOR QUALITY IN INTERPRETING

The assumption that quality in interpreting is not a monolithic concept but involves more than one component can be traced back to Jean Herbert (1952), who mentioned accuracy and style as the two main concerns, suggesting that interpreters were sometimes faced with a choice between these two. Furthermore, he pointed to the role of such factors as grammar, fluency, voice quality and intonation in an interpreter’s performance. For decades, though, the relative importance of these and other criteria remained unclear.

It is widely known that the first scholar who sought to collect empirical data on the various factors that play a role in the evaluation of conference interpreting was Hildegund Bühler, an interpreter by training who spent most of her career as a scholar in the field of terminology and taught translation and translation theory at the University of Vienna. Married to an active conference interpreter, she took a special interest in the profession and conducted several studies on aspects of a conference interpreter’s work. In a pioneering effort, Bühler (1986) surveyed members of AIIC about the criteria they presumably applied when assessing the quality of an interpreter and his or her performance. For this purpose she drew up a list of 16 criteria, distinguishing between linguistic-semantic and extra-linguistic factors. The former included “native accent”, “fluency of delivery”, “logical cohesion of utterance”, “sense consistency with original message”, “completeness of interpretation”, “correct grammatical usage”, “use of correct terminology” and “use of appropriate style”, and the latter “pleasant voice”, “thorough preparation of conference documents”, “endurance”, “poise”, “pleasant appearance”, “reliability”, “ability to work in a team” and “positive feedback of delegates”.

As evident from some of the criteria in the second group, such as poise and appearance, Bühler envisaged an assessment of interpreters and interpreting in the consecutive as well as the simultaneous mode, and sought to cover such behavioral aspects as preparation, reliability and teamwork. On her one-page questionnaire, the list of 16 items was to be rated by respondents on a four-point ordinal scale ranging from “highly important” and “important” to “less important” and “irrelevant”. Responses were collected (at an AIIC Council meeting and international symposium in Brussels in 1984) from 41 AIIC members. In addition, six members of the association’s Committee on Admissions and Language Classification (CACL) filled in the questionnaire.

The most highly rated criterion by far is “sense consistency with original message”, which relates to the arguably crucial idea of source-target correspondence, but without making explicit reference to such controversial concepts as equivalence or faithfulness. Rather, Bühler’s use of the terms “sense” and “message” points to levels of meaning beyond the linguistic surface, as foregrounded by Danica Seleskovitch in her “théorie du sens” (e.g. 1977). The criterion of “sense consistency” with the original could therefore be expected to be embraced without any reservations by the conference interpreting community. Also related to these ideas is the second-ranking criterion, “logical cohesion”, which captures the requirement for the interpreter’s output to “make sense” to the audience.

Somewhat surprisingly perhaps, all other output-related aspects of performance quality were deemed less relevant by Bühler’s respondents than behavioral qualities such as reliability and thorough preparation, which were considered highly important by four fifths and nearly three quarters of respondents, respectively. Only half the respondents, in contrast, gave the highest rating to factors like correct terminology and grammar, fluency and, interestingly, completeness, with paraverbal characteristics such as voice, native accent and style appearing further down in the list.
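Because a rating task lets respondents give the same score to many criteria, any ordered list has to be derived from the response percentages, and ties remain possible. The following minimal sketch illustrates this. It assumes a two-level ordering rule (share of "highly important" first, then share of "important"), which is an illustrative assumption rather than a documented procedure, and it uses invented toy data for three unnamed criteria, not Bühler's actual responses.

```python
from collections import Counter

# Bühler's four-point scale, from highest to lowest.
SCALE = ("highly important", "important", "less important", "irrelevant")


def order_by_ratings(ratings):
    """Order criteria by % 'highly important', then by % 'important'.

    `ratings` maps each criterion to the list of answers it received.
    The two-level ordering rule is an assumption for illustration.
    """
    rows = []
    for criterion, answers in ratings.items():
        counts = Counter(answers)
        n = len(answers)
        rows.append((criterion,
                     100 * counts[SCALE[0]] / n,
                     100 * counts[SCALE[1]] / n))
    # Sort descending on both percentages; sorted() is stable, so fully
    # tied criteria simply keep their input order.
    return sorted(rows, key=lambda row: (-row[1], -row[2]))


def tied_groups(rows):
    """Return groups of criteria whose rating profiles cannot be told apart."""
    groups = {}
    for criterion, top, second in rows:
        groups.setdefault((top, second), []).append(criterion)
    return [names for names in groups.values() if len(names) > 1]


# Invented toy data: four respondents, three unnamed criteria.
toy = {
    "A": ["highly important"] * 3 + ["important"],
    "B": ["highly important"] * 2 + ["important"] * 2,
    "C": ["important"] * 2 + ["highly important"] * 2,
}
ordered = order_by_ratings(toy)
print([name for name, _, _ in ordered])  # ['A', 'B', 'C']
print(tied_groups(ordered))              # [['B', 'C']]
```

Criteria B and C end up in adjacent positions only because of their input order; a ranking task, by contrast, forces respondents to separate them.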

Bühler’s seminal study proved highly influential in stimulating further surveys among interpreters and, in particular, end-users (e.g. Kurz 1993). Nevertheless, the limitations of Bühler’s small-scale study seem all too clear. Most critically, it is not known how her sample of 47 AIIC members was constituted, so it is not possible to generalize the findings to the total population. And since Bühler’s questionnaire contained no items eliciting demographic background information, nothing is known about the age, gender, working experience or language combination of this group of conference interpreters.

Some of these shortcomings were redressed in the first follow-up study on interpreters’ quality criteria, which was not conducted until some one-and-a-half decades later. Using a web-based rather than a paper-and-pencil questionnaire, Chiaro and Nocella (2004) surveyed interpreters throughout the world from whom they collected a range of demographic data, including age, gender, education, interpreting experience, geographic region and employment status. Their sample of 286 respondents was 71% female, with a mean age of 45 years and an average of 16 years of experience in interpreting. Forty-four percent of the respondents were born in Western European countries, and as many had a degree in interpreting. Rather surprisingly, the largest group of interpreters in the sample (46%) came from the Americas, which may suggest that Chiaro and Nocella (2004) targeted professionals beyond the field of conference interpreting, the main centers of which have traditionally been in Europe. Indeed, their statement that they sent out “about 1000 invitations … to interpreters belonging to several professional associations” indicates that their target population was both smaller than the full membership of AIIC and broader in terms of affiliation. Unfortunately, the authors do not specify which professional associations their respondents were affiliated with, nor do they state explicitly whether AIIC was among them. It is therefore largely unclear to what extent the AIIC members in Bühler’s study can be compared to the interpreter sample accessed by Chiaro and Nocella.

Another problem with comparability arises from the difference in the tasks the researchers expected their respondents to perform. Whereas Bühler used a rating task for each of her sixteen criteria, Chiaro and Nocella, while using a largely similar list of items, had their respondents establish a ranking, from most important to least important. What motivated this change in research design was the view that Bühler’s respondents had proved “incapable of discriminating and were giving equal importance to all the criteria” (Chiaro & Nocella 2004: 283). While this contention seems somewhat overstated, given the sequence reflected in Figure 1, it does apply to the four or five middle-ground criteria (terminology, fluency, grammar, etc.), each of which was rated “highly important” by nearly half the respondents and “important” by nearly half.

Chiaro and Nocella therefore had their respondents perform two ranking tasks, one for the set of nine “linguistic criteria” and one for a set of eight “extra-linguistic criteria” that differed considerably from Bühler’s original list. The results for the former are shown in Table 1, juxtaposed with an ordered list based on the percentages for “highly important” and “important” in Bühler’s study.

Table 1: Comparative Ranking of Quality Criteria

| Rank | Chiaro & Nocella (2004) | Bühler (1986) |
|------|-------------------------|---------------|
| 1 | consistency with the original | sense consistency with original message |
| 2 | completeness of information | logical cohesion of utterance |
| 3 | logical cohesion | use of correct terminology |
| 4 | fluency of delivery | fluency of delivery |
| 5 | correct grammatical usage | correct grammatical usage |
| 6 | correct terminology | completeness of interpretation |
| 7 | appropriate style | pleasant voice |
| 8 | pleasant voice | native accent |
| 9 | native accent | appropriate style |

Notwithstanding the comparability issues arising from the different tasks (and also, perhaps, from the reformulation of some of the criteria), the most striking difference between the two lists of priorities clearly concerns the criterion of “completeness”, which ranks second in the study by Chiaro and Nocella but only sixth according to the ratings collected by Bühler. Another marked discrepancy concerns correct terminology, which received the third-highest ratings from Bühler’s AIIC interpreters but was ranked only sixth by those filling in Chiaro and Nocella’s web-based questionnaire.
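One conventional way to put a single number on how closely the two orderings in Table 1 agree is Spearman's rank correlation coefficient, ρ = 1 − 6Σd² / (n(n² − 1)), where d is the difference in rank for each criterion. The sketch below applies it to the nine criteria as ordered in Table 1; the criterion labels are shortened for readability, and the statistic is an illustration added here, not a figure reported in either study. It also lists the largest rank shifts, which single out the two discrepancies discussed above.

```python
# Orderings of the nine "linguistic" criteria as listed in Table 1
# (labels shortened; Chiaro & Nocella's wording differs slightly).
CHIARO_NOCELLA = [
    "sense consistency", "completeness", "logical cohesion", "fluency",
    "grammar", "terminology", "style", "voice", "native accent",
]
BUEHLER = [
    "sense consistency", "logical cohesion", "terminology", "fluency",
    "grammar", "completeness", "voice", "native accent", "style",
]


def spearman_rho(order_a, order_b):
    """Spearman's rho for two complete rankings without ties."""
    rank_a = {item: i + 1 for i, item in enumerate(order_a)}
    rank_b = {item: i + 1 for i, item in enumerate(order_b)}
    if rank_a.keys() != rank_b.keys():
        raise ValueError("both rankings must cover the same criteria")
    n = len(rank_a)
    d_squared = sum((rank_a[c] - rank_b[c]) ** 2 for c in rank_a)
    return 1 - 6 * d_squared / (n * (n ** 2 - 1))


def rank_shifts(order_a, order_b):
    """Rank in list B minus rank in list A, largest absolute shift first."""
    rank_b = {item: i + 1 for i, item in enumerate(order_b)}
    shifts = {c: rank_b[c] - (i + 1) for i, c in enumerate(order_a)}
    return sorted(shifts.items(), key=lambda kv: -abs(kv[1]))


print(round(spearman_rho(CHIARO_NOCELLA, BUEHLER), 3))  # 0.733
print(rank_shifts(CHIARO_NOCELLA, BUEHLER)[:2])
# [('completeness', 4), ('terminology', -3)]
```

The squared rank differences sum to 32, so ρ = 1 − 192/720 ≈ 0.73: broadly similar orderings, with completeness and terminology accounting for most of the disagreement. Given the different tasks (ranking vs. rating), this is at best a rough indicator.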

In the face of such diverging results, and the dearth of empirical findings regarding professional interpreters’ quality-related preferences in general, there is an obvious need for further research. In an effort to respond to this need, we conducted a comprehensive survey as part of a larger research project on “Quality in Simultaneous Interpreting” carried out at the University of Vienna.

3. AIIC SURVEY

The “Survey on Quality and Role”, which was carried out among AIIC members in late 2008 (Zwischenberger & Pöchhacker 2010), combines the need for replication with the desire for innovation. With regard to the former, it was decided to follow Bühler’s choice and target AIIC as the most comprehensive professional association of conference interpreters in the world. In the interest of comparability, we also adopted Bühler’s original criteria and kept her rating task, though using more consistent wording for the four response options. The focus of our overall project (on simultaneous interpreting) and the tradition of user-expectation surveys using only output-related criteria led us to concentrate on Bühler’s “linguistic” criteria, plus voice quality, which has been included in such lists since Kurz (1993). Taking note of research on quality expectations published over the years, we extended the original list of criteria to include “lively intonation”, as studied in particular by Ángela Collados Aís (1998) at the University of Granada, and “synchronicity”, which had emerged as a feature expected of simultaneous interpreting by respondents in the AIIC-sponsored user expectation survey carried out by Moser (1996).

Aside from these additional criteria, and a set of follow-up questions concerning the potential variability of quality-related preferences depending on the type of assignment or meeting, the crucial innovation in this survey project was the use of a state-of-the-art approach to questionnaire administration and data collection. Like Chiaro and Nocella, we used a web-based questionnaire; unlike these pioneers, however, we were able to benefit from user-friendly software available from the open-source community.

3.1 Survey Methodology

Online surveys using web-based questionnaires emerged in the early 1990s, and a number of tools are now available which allow non-experts to design and administer surveys of one kind or another. One of the best-known providers is SurveyMonkey, a US company founded in 1999 that offers a basic version of its survey tool for free. A word of caution must be sounded, though, as some of these readily available tools do not allow the survey administrator full control over the data and the survey population. Most critically, access to the survey instruments is often provided by a link (URL) that can freely be disseminated and allows anyone to participate. This can obviously undermine the integrity of the data and thus the validity of the findings.

For our survey we therefore opted for an application that ensures controlled access as well as full autonomy in the handling of data. The software is called LimeSurvey and has been developed in the open-source community since 2003 (when it was created under the name of PHPSurveyor by Australian software developer Jason Cleeland). As its original name suggests, it uses PHP as the programming language, in combination with MySQL, a relational database management system. This software can be downloaded and installed on a server, if available. In our case, the survey application was hosted in-house on our own server at the Center for Translation Studies.

The software application has two main components: a questionnaire generator tool and a survey administration tool which, in turn, runs two separate databases – one with the e-mail addresses of potential participants, and the other with their responses. Since the two databases are not linked, the system guarantees full anonymity of the responses, whereas it allows the administrator to monitor whether a response has been received from a given e-mail address in the database. If not, the system can be used to send a reminder to those addresses from which no response has been received. For each entry in the database of addresses, the system generates a unique access token (password), which can be used only once to complete the survey. This makes it impossible to complete the survey more than once (not a major concern in our case) or to share the link with persons beyond the defined survey population.
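The access logic described above (one-time tokens, a reminder list, and answers stored separately from addresses) can be sketched in a few lines. This is a minimal illustration under stated assumptions: it uses Python's built-in sqlite3 module in place of MySQL, and the class, table and method names (TokenSurvey, invitation, response, submit, reminder_list) are hypothetical; they do not reproduce LimeSurvey's actual code or schema.

```python
import json
import secrets
import sqlite3


class TokenSurvey:
    """Hypothetical sketch of token-controlled, anonymous survey access."""

    def __init__(self) -> None:
        # Two separate databases that share no key: addresses vs. answers.
        self.addresses = sqlite3.connect(":memory:")
        self.answers = sqlite3.connect(":memory:")
        self.addresses.execute(
            "CREATE TABLE invitation ("
            "email TEXT PRIMARY KEY, token TEXT UNIQUE, completed INTEGER DEFAULT 0)"
        )
        self.answers.execute(
            "CREATE TABLE response (id INTEGER PRIMARY KEY, answers TEXT)"
        )

    def invite(self, email: str) -> str:
        """Register an address and return its unique one-time token."""
        token = secrets.token_urlsafe(16)
        with self.addresses:  # commits the insert
            self.addresses.execute(
                "INSERT INTO invitation (email, token) VALUES (?, ?)", (email, token)
            )
        return token

    def submit(self, token: str, answers: dict) -> bool:
        """Accept one questionnaire per valid, unused token."""
        row = self.addresses.execute(
            "SELECT completed FROM invitation WHERE token = ?", (token,)
        ).fetchone()
        if row is None or row[0]:
            return False  # unknown token, or token already used
        with self.addresses:
            self.addresses.execute(
                "UPDATE invitation SET completed = 1 WHERE token = ?", (token,)
            )
        with self.answers:
            # Only the answers are stored; nothing identifies the sender.
            self.answers.execute(
                "INSERT INTO response (answers) VALUES (?)",
                (json.dumps(answers, sort_keys=True),),
            )
        return True

    def reminder_list(self) -> list:
        """Addresses from which no response has been received yet."""
        rows = self.addresses.execute(
            "SELECT email FROM invitation WHERE completed = 0 ORDER BY email"
        )
        return [email for (email,) in rows]


survey = TokenSurvey()
token_a = survey.invite("a@example.org")
survey.invite("b@example.org")
print(survey.submit(token_a, {"fluency of delivery": "very important"}))  # True
print(survey.submit(token_a, {"fluency of delivery": "important"}))       # False
print(survey.reminder_list())                                             # ['b@example.org']
```

The design choice mirrors the one described in the text: the administrator can see who has responded (the completed flag) but not what any given address answered, because the response table holds no e-mail address or token.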

Clearly, then, it is essential to have a defined survey population and an e-mail address for each of its members. Ten or twenty years ago, this requirement made it highly questionable whether a web-based approach could ever yield a representative sample. And even now, a web-based survey instrument will obviously reach only those who have and use e-mail.

This was also a slight limitation in our case, as some members of AIIC prefer, for whatever reason, not to have their e-mail addresses listed. Since our survey was not an AIIC-sponsored initiative, we compiled our list of e-mail addresses from the Association’s membership directory for the year 2008, ending up with over 2,500 entries. This made for an excellent sampling frame, but sampling as such would again have involved some tricky issues, such as aiming for a balanced representation with regard to regions, working languages and employment status. We therefore avoided sampling altogether and opted for a survey of the full population; that is, e-mail invitations to participate in the web-based survey went out to all 2,523 addresses in our database. All but a few members of our target group have English as a working language, so the bias of using an English-language questionnaire for respondents throughout the world should be very small to negligible.

The survey instrument as such, developed in a painstaking process within our project team, comprised three parts. One of them (Part C) focused on the issue of conference interpreters’ self-perception of their role. While certainly relevant and related to the issue of quality, this part of the study is discussed elsewhere. Another part (Part A) elicited demographic and socio-professional information, including employment status, AIIC region, age, gender and working experience. Part B of the questionnaire was devoted to the relative importance of quality criteria and essentially consisted of an array-type item listing the eleven criteria and offering four response options (“very important”, “important”, “less important”, “unimportant”) as well as a “no answer” option for each criterion.

3.2 Survey Findings

A total of 704 AIIC members worldwide participated in the survey, which was active for 7 weeks from late September to early November 2008. This highly satisfactory response rate (28.5%) gave us a sample that, in many ways, closely matches the profile of AIIC members in general. Thus, the average respondent in our survey is 52 years old and has been in the profession for 24 years. By the same token, the male–female ratio of 1 : 3 (76% women) is as typical of the overall membership structure as the predominance of freelancers, who make up 89% of our sample.
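A response rate is simply the number of completed questionnaires divided by the number of invitations sent. The short sketch below computes it and, conversely, the number of invitations a reported rate implies. Dividing 704 by the 2,523 addresses mentioned above gives about 27.9%; the reported 28.5% would correspond to roughly 2,470 invitations. One possible explanation, which is an assumption not stated in the paper, is that undeliverable addresses were excluded from the base.

```python
def response_rate(responses: int, invited: int) -> float:
    """Percentage of invited members who returned a completed questionnaire."""
    if invited <= 0:
        raise ValueError("invited must be positive")
    return 100 * responses / invited


def implied_denominator(responses: int, rate_percent: float) -> float:
    """Number of invitations that a reported rate would correspond to."""
    return responses / (rate_percent / 100)


print(f"{response_rate(704, 2523):.1f}%")     # 27.9%
print(round(implied_denominator(704, 28.5)))  # 2470
```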

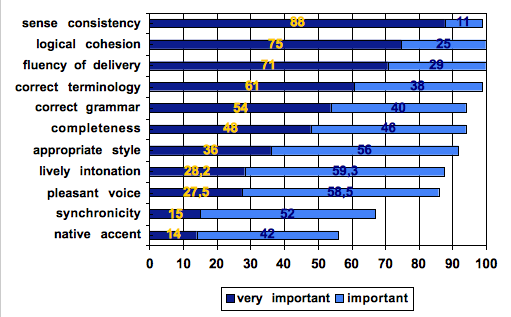

The ratings (“very important”, “important”) for the eleven criteria are summarized in Figure 1.

Figure 1: Ratings of Quality Criteria (n=704)

The findings shown in Figure 1 reflect a distinct pattern of priorities. In part, the sequence of criteria matches that found in previous studies, especially the user expectation surveys initiated by Kurz (1993). This concerns the two top-rated criteria, “sense consistency with original message” and “logical cohesion of utterance”, and the criteria that generally attract the lowest ratings of relative importance, such as “pleasant voice” and “native accent”. Much more so than Bühler’s study, our survey also yields a clear order of priorities for the four output-related criteria that are sandwiched between the ones at the top and at the bottom of the list: fluency ranks as an undisputed number 3, followed by the correct use of terminology and grammar, with completeness ranking even lower. This is in line with Bühler’s findings, but – again – in stark contrast with those of Chiaro and Nocella, whose ranking had completeness as the second most important criterion of quality. The reasons for this discrepancy may have to do with the composition of the survey population in Chiaro and Nocella’s study: Given the sizeable share of interpreters in the Americas, completeness may have been valued more highly because these respondents would likely be working also in court and other legal settings. However, in the absence of more detailed information on the respondent profile, such speculation is impossible to substantiate.

With regard to the top three quality criteria in the opinion of AIIC members, our survey yields a clear result: While “sense consistency” and “logical cohesion” remain undisputed at the top, “use of correct terminology” is displaced from third place by “fluency of delivery”. Differences between our results and those of Bühler (1986) arise mainly at the lower end of the list, where “appropriate style” receives higher ratings than “pleasant voice” and “native accent”. The ratings for the newly introduced criterion of “lively intonation” closely match those for “pleasant voice”, which may be a sign of conceptual overlap, even though care had been taken to avoid that by placing intonation well ahead of voice quality in the list.

In all, the views of AIIC members concerning the relative importance of output-related aspects of quality have been shown by our survey to be relatively stable. While our replication of Bühler’s study has shown the pattern of priorities to be largely consistent over time, the comprehensiveness of our full-population survey, which reached experienced conference interpreters in AIIC “regions” throughout the world, could also lead us to claim broad geographical coverage and thus a high degree of consistency on a global scale. It is here, though, that our critical reflection, as suggested by the question mark in the title of this paper, must begin. For there are several issues that make it doubtful whether these ostensibly robust survey findings can be considered “global”.

4. GLOBAL STANDARDS AND CRITERIA?

In raising a few problematic issues, I should begin by acknowledging that the term “standards” is rather broad and potentially misleading; what I mean here specifically is the pattern of more or less relevant output-related quality criteria for simultaneous interpreting as seen from the perspective of the providers of that service – the perspective of “the profession”, for short.

And how global is that profession? Clearly, conference interpreting can be considered an early example of a truly international twentieth-century profession. This is reflected in the membership of AIIC, which is not based on a territorial principle but open to practitioners in any country, whatever their choice of professional domicile. It is an international association of individuals from all over the world that has grown from a few dozen members in 1953 to nearly 3,000 in nearly 100 countries today.

This coverage is impressive, and yet we need to be cautious when extrapolating from an AIIC survey to the interpreting profession worldwide. One reason is that even with close to 3,000 members, AIIC by no means includes every professional conference interpreter in the world. According to the AIIC website, there are 29 members in China. By comparison, there are 73 in Austria, my tiny home country with roughly 8.4 million inhabitants.

What is worse, it is not even entirely clear how a “conference interpreter” is to be defined. Some would place the emphasis on university-level training (which would be problematic for countries with a different interpreter-training tradition, such as Japan); others might focus on proficiency in both of the main working modes (consecutive as well as simultaneous interpreting), and others again could focus their definition on the setting in which these interpreters work, that is, conferences – or (as a 1984 working definition proposed by AIIC would have it) “conference-like situations”. In defining a conference interpreter, we would probably want to use all of the above features, but none of them may be “necessary and sufficient” to set hard-and-fast boundaries for the concept.

With regard to settings and fields of work, we must also acknowledge that the profession of conference interpreting is stratified, or that there are different markets, with a high end that includes the multilingual international organizations (known as the AIIC Agreement sector) and a more local market in which assignments typically involve bidirectional interpreting. Where this is done in consecutive, it becomes difficult to distinguish conference from liaison interpreting if one does not go by the number of participants in the interaction – or by the fee.

Mindful that we may only have captured a certain – though indisputably relevant – segment of the overall population of conference interpreters in our survey, we are complementing our work by satellite surveys in various national contexts, including the Czech Republic, Germany, Italy and Poland. By surveying the members of national interpreter associations in their (A) language, we are hoping to see whether the AIIC findings will be corroborated for the more national conference interpreting markets. In one of these country-based surveys, 107 members of the German Association of Conference Interpreters (VKD), out of the 323 who had received an invitation to participate, indicated their quality-related preferences along largely similar lines as the AIIC population (see Zwischenberger 2011). The four top-rated criteria remained the same, but “completeness” exchanged places with “correct grammar”. This may have to do with the types of meetings in which the respondents usually work: completeness may seem more important in highly technical specialist conferences and negotiations, whereas standards of grammatical correctness may be somewhat lower among interpreters who typically work also into their B language.

The conclusion to be drawn from these findings for the Chinese context is clear: In order to find out about the views on quality held by Chinese conference interpreters, a survey of this kind would be needed. We cannot claim that our AIIC Survey, which included only eleven respondents who indicated Mandarin as their A language, covers conference interpreting in China.

With a view to such a survey, two fundamental design issues need to be considered: One is how to define the population – and to access all or a random sample of its members by e-mail; and the other concerns the criteria to be evaluated. The first problem was tackled in a survey study by Setton and Guo (2009), who were keenly aware of the difficulty, if not impossibility, of drawing a representative sample of the country’s population of professional interpreters (and translators, for that matter). For example, the 62 respondents in their study, which centered on Shanghai and Taipei and included only 27 who mainly worked in interpreting rather than translation, had an average age of 35 years (compared to 52 in the AIIC sample). What is more, over 80% of the respondents were not affiliated with any professional association. Most of the respondents were reached via lists of alumni of interpreter training programs and lists used by recruiters.

No less challenging than defining and accessing the population is the issue of the criteria to be used in the survey instrument. They should presumably be offered to respondents in Mandarin, so they would need to be translated. As acknowledged by Bühler (1986) and investigated in depth by the Granada team in so-called “contextualization studies”, in which they asked respondents about their understanding of a given criterion (and found widely diverging interpretations), the criteria themselves are poorly defined. What is more, some of them, such as intonation, may play a different role in a tonal language like Mandarin Chinese. Indeed, linguists formerly held that Chinese had no intonation to speak of. While this is no longer the case (e.g. Kratochvil 1998), the specifics of intonational patterns and functions have yet to be fully understood – and applied to research in interpreting studies.

All this brings me to a mixed conclusion. Despite advances in technology which have enabled us to carry out a “global” survey on quality criteria, our findings, though seemingly robust, are still patchy, and I have tried to point out why filling in the picture for China is a difficult challenge. On the other hand, the conference interpreting community in China has been developing fast (cf. Setton 2011), and the same holds true for the community of interpreting scholars, as evident from the highly successful National Conference series. There clearly is ample reason to assume that state-of-the-art survey research of the type presented in this paper will soon be conducted in China on the issue of quality and other topics, extending and deepening our understanding of conference interpreting as a global profession.

REFERENCES

AIIC (1982) “Practical guide for professional interpreters”. Geneva: International Association of Conference Interpreters.

Bühler, Hildegund (1986) “Linguistic (semantic) and extra-linguistic (pragmatic) criteria for the evaluation of conference interpretation and interpreters”. Multilingua, 5 (4): 231-235.

Chiaro, Delia & Nocella, Giuseppe (2004) “Interpreters’ perception of linguistic and non-linguistic factors affecting quality: A survey through the World Wide Web”. Meta, 49 (2): 278-293.

Collados Aís, Ángela (1998/2002) “Quality assessment in simultaneous interpreting: The importance of nonverbal communication”. In F. Pöchhacker & M. Shlesinger (eds.) The Interpreting Studies Reader. London/New York: Routledge, 327-336.

Herbert, Jean (1952) The Interpreter’s Handbook: How to Become a Conference Interpreter. Geneva: Georg.

Kratochvil, Paul (1998) “Intonation in Beijing Chinese”. In D. Hirst & A. Di Cristo (eds.) Intonation Systems: A Survey of Twenty Languages. Cambridge: CUP, 417-431.

Kurz, Ingrid (1993/2002) “Conference interpretation: Expectations of different user groups”. In F. Pöchhacker & M. Shlesinger (eds.) The Interpreting Studies Reader. London/New York: Routledge, 313-324.

Moser, Peter (1996) “Expectations of users of conference interpretation”. Interpreting, 1 (2): 145-178.

Seleskovitch, Danica (1977) “Take care of the sense and the sounds will take care of themselves or why interpreting is not tantamount to translating languages”. The Incorporated Linguist, 15: 7-33.

Setton, Robin (ed.) (2009) China and Chinese. Special Issue of Interpreting, 11 (2).

Setton, Robin & Guo, Alice Liangliang (2009) “Attitudes to role, status and professional identity in interpreters and translators with Chinese in Shanghai and Taipei”. Translation and Interpreting Studies, 4 (2): 210-238.

Zwischenberger, Cornelia (in press) “Quality criteria in simultaneous interpreting: An international vs. a national view”. The Interpreters’ Newsletter, 15 (2011).

Zwischenberger, Cornelia & Pöchhacker, Franz (2010) “Survey on quality and role: Conference interpreters’ expectations and self-perceptions”. Communicate! Spring 2010. http://www.aiic.net/ViewPage.cfm/article2510.htm (accessed 25 January 2011).
